The Information Commissioner’s Office (ICO) has unveiled its Internal AI Use Policy — a 30-page internal guide on how Britain’s data watchdog intends to “responsibly” embrace artificial intelligence.

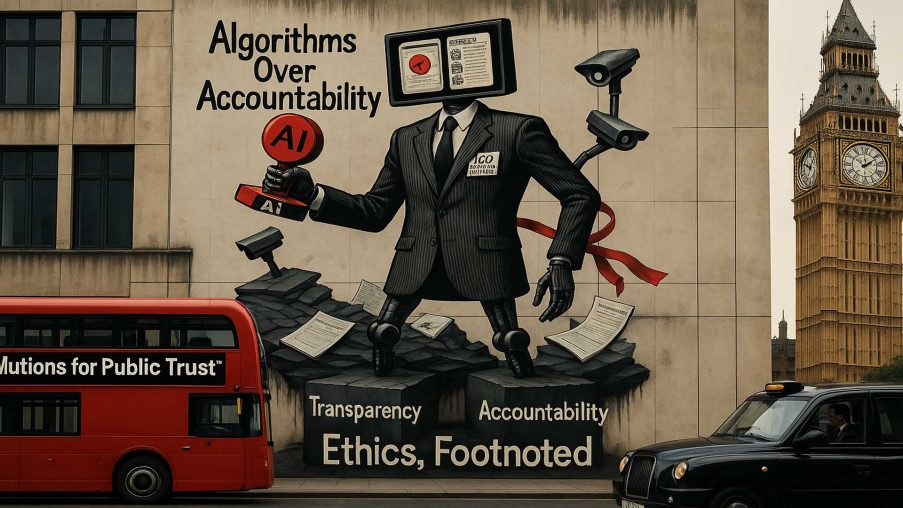

It’s full of noble intentions: transparency, fairness, governance, accountability. But beneath the polished language lies a painful irony. This is a regulator that has failed to regulate — a body paralysed by bureaucracy, now claiming moral authority to lead the charge on AI ethics.

The Watchdog That Couldn’t Bite, Now Wants to Code

In his foreword, Chief Executive Paul Arnold declares himself “an enthusiastic daily user” of AI tools, praising their “astonishing pace” and “positive impact.”

It’s a remarkable statement from the head of an organisation better known for delay than for innovation. For years, the ICO has been criticised for its glacial response times, its reluctance to tackle systemic data breaches, and its impotence in the face of government misuse of personal data.

Now it wants to pioneer responsible AI.

Governance by Template: Bureaucracy as a Substitute for Control

The policy is a bureaucrat’s paradise — packed with annexes, checklists, and flowcharts. There’s an “AI Screener,” an “AI Use Case Specification,” an “AI Inventory,” and no shortage of boards and committees to review them.

It’s an illusion of governance — a paper shield against accountability. The ICO tells its own staff to “mark AI-generated text” with footnotes, ensure “human review,” and conduct impact assessments. But it offers no detail on how any of this will actually be enforced, or by whom.

The irony is sharp: a regulator that cannot hold others to account now trusts itself to self-certify compliance.

Ethics for Optics

The policy warns against using AI in any way that may cause “significant risk of harm to individuals, groups or the reputation of the ICO.”

That last phrase is revealing. The priority is not the public but the regulator’s own image. Ethics have become performance art: safety defined not by outcomes, but by optics.

This is the same ICO that refuses to disclose complaint outcomes, hides behind legal privilege to avoid scrutiny, and routinely redacts responses under the Freedom of Information Act. Transparency, it seems, is always for someone else.

Automation Without Accountability

The ICO speaks of “accountability for AI governance” as though this were something new. Yet accountability has been missing from its own operations for years. Individuals who complain about data breaches face months of silence. Fines against multinationals are little more than rounding errors. Enforcement action has plummeted while corporate non-compliance has soared.

Against that backdrop, the notion that the ICO can responsibly automate itself verges on satire.

Artificial Intelligence, Genuine Hypocrisy

The ICO’s AI policy is less a statement of ethics than a confession of insecurity — a glossy internal memo designed to make a fading regulator look futuristic.

It references every conceivable standard — ISO certifications, government playbooks, data-ethics frameworks — as if citations could replace credibility. But no number of footnotes will disguise the truth: the ICO cannot regulate the present, let alone the future.

The Final Irony

When a regulator that has lost public confidence starts boasting about its AI strategy, the question isn’t how it will regulate the technology — it’s how the technology will regulate it.

The ICO has spent years barking at the powerless and bowing before the powerful. Now, armed with AI, it risks becoming something even worse: a digital pantomime of oversight, run by a machine that believes its own press releases.

Legal Disclaimer

This article represents the author’s independent analysis and opinion based on publicly available information, including the Information Commissioner’s Office’s Internal AI Use Policy (August 2025). It is published in the public interest to promote transparency, accountability, and informed debate on matters of regulatory governance.

No statement herein should be interpreted as alleging misconduct by any individual unless expressly stated and supported by evidence. References to organisations, officials, or policies are made in the context of critical commentary and fair reporting, as protected under sections 3 (honest opinion) and 4 (publication on a matter of public interest) of the Defamation Act 2013 and Article 10 of the European Convention on Human Rights (freedom of expression).

Legal Lens is an independent publication. It is not affiliated with, endorsed by, or acting on behalf of the Information Commissioner’s Office or any public authority. Readers are encouraged to verify cited sources and form their own conclusions.